Recently I’ve been having a few conversations with people concerned about the direction social media is taking. Fear, hatred and misinformation seem to be promoted over kindness, mindfulness and rational thought - what exactly is going on?

In July 2019 I kicked my Facebook and Instagram habits. I wrote about my experiences here, where I observed:

So - through no malice - the “bots” will show people posts that keep them stuck to their screens. Posts that aggravate, posts that divide, posts that cause outrage, posts which generate drama. Happy people don’t stay online as much as angry/sad/outraged people do. So the “bots” will naturally feed people content that makes them angry/sad/outraged.

The “bots” I’m talking about are the algorithms which choose what bits of content people see online. As I recently wrote to some friends:

I’m guessing a lot of the problem is that social media selects the posts it shows people on the very blunt metric of screen time.

Which is like selecting food for a child’s diet based on how much of it they will eat - with similarly disastrous health outcomes.

Thinking about the issues of bot-curated content brought me back to a LinkedIn post I made in 2018 about how far wrong bots can go. As it’s buried in LinkedIn, I figured it was worth a repost in the current climate:

Originally Published on December 12, 2018

Our online world is run by bots - algorithms that decide what we see & when we see it. Every online search, every social media feed is curated by an army of bots, intent on meeting the goals of their owners - be it more engagement or more sales.

Up until this week I’ve been merely an interested observer of the bots’ laughable attempts to match adverts to my interests, and of their uncanny ability to deliver bang-on search results from trillions of pages of content.

But now I find myself pausing for thought.

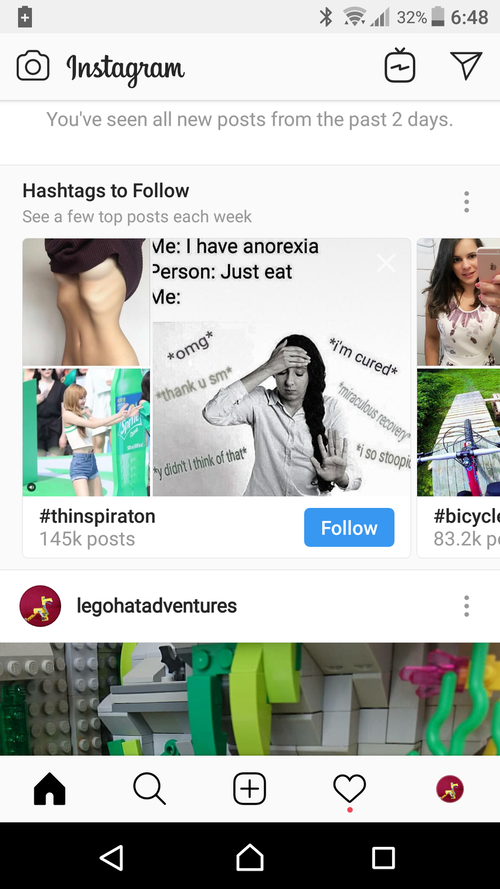

On Monday I was using an Instagram account to put up photos of Lego models built by me & my son. The bots of Instagram (#botsofinstagram) made a suggestion that I should follow #thinspiration:

For those of you who don’t know, “thinspiration” is a term used by people who want to be as thin as possible. It is associated with anorexia & other eating disorders, and is often used to portray eating disorders in a positive light.

And Instagram recommended “#thinspiration” as a “Hashtag to follow” to an account which is 100% pictures of Lego.

My first reaction was “WTF am I seeing this for?”

My second reaction was “This must be some kind of terrible mistake, I’d better tell Instagram.”

This isn’t some sort of knee-jerk moral outrage. I have a few friends who’ve battled with eating disorders, which are serious mental illnesses that can lead to hospitalisation or death. I’ve even visited a couple of those friends in hospital; this isn’t a matter to take lightly.

However, here we are - a social media platform that caters for 13-year-olds & upwards is recommending that its users follow a tag that promotes eating disorders.

As an IT geek I’m 99.9999% sure that the suggestion came from a “bot” rather than a real person sitting in Instagram HQ manually linking Lego with starving yourself. So before Instagram can suggest more inappropriate hashtags to its user base I set about trying to report it.

First up - see those three dots above the suggestion? They allow you to “Hide” but not “Report”. So off to Instagram Help I go.

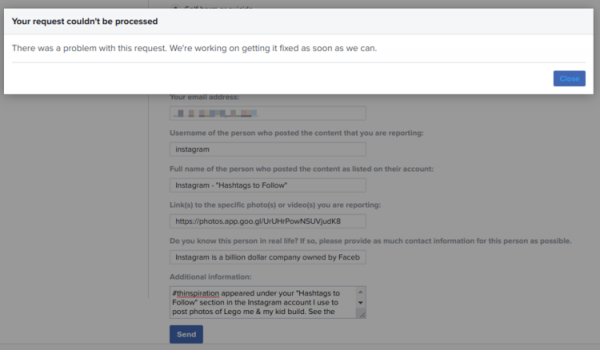

It turns out that the whole of the Instagram support system is designed to report content/behaviour from other users - not from Instagram itself. There is no direct way to contact Instagram & the help section keeps referring to the “Report” feature in the app for reporting inappropriate content from other users. Who guards the guards?

So I attempted to report it using their user report form & got the following response:

This is a multi-billion dollar company owned by the largest social media platform in the world (Facebook) & it seems pretty much impossible to report that its bots are suggesting inappropriate content.

After thinking about it I ended up sending my concerns to the eating disorder support groups (ironically) listed in Instagram’s help section, in the hope that they can talk to a real human being at Instagram. The eating disorder support groups have responded & have all said they will contact Instagram. Hopefully we will see some response from the human employees of Instagram (#humanemployeesofinstagram).

Which brings me back to the bots. 500 million people use Instagram every day & what those people see or experience has been pretty much left in the hands of bots. Instagram seem to trust their bots so much that there isn’t even a way to report that the bots are suggesting people try starving themselves.

Exactly how much faith are we going to put into bots, without human checks and balances?

What other tags have the Instagram bots been suggesting to users?

The simple answer is “Don’t use Instagram” - but that doesn’t help the 500 million people who’ll use it today. Or the 13-year-old who’s been told by bots to try starving themselves, because the bots know best.

In mid-2020 it’s well worth remembering that the same bots which mindlessly recommended starvation to me in 2018 are mindlessly recommending conspiracy theories, misinformation, fear and hatred to the general public.

To be honest, I don’t know what the solution is. We’ve become so reliant on social media as a communication and (mis)information tool that I can’t see a mass exodus.

But I think being aware of what is choosing what you read online is a good step towards recognising misinformation for what it is and improving our mental well-being.

Banner Photo by Eric Krull